Introduction

Many cognitive paradigms rely on active decision-making, often combined with extended training periods in which subjects learn to respond to arbitrary stimuli. As a result, these paradigms can introduce participation biases (e.g. subjects may lack the motivation to participate in training), and previously learned contingencies may bias the outcomes of subsequent, similar tests (Harlow, 1949; Rivas-Blanco et al., 2023). In particular, some species, such as prey animals, may be less motivated to engage in decision-making tasks because of heightened vigilance in test situations, in which individuals are typically isolated from the rest of the group for a short period of time. Active decision-making tasks may therefore be inappropriate in some contexts if the goal is to assess the population-wide distribution of cognitive traits in a species or to make adequate cross-species comparisons.

Looking time paradigms (Winters et al., 2015), i.e. experimental setups in which visual stimuli are presented to a subject and its visual attention to each stimulus is measured (see Wilson et al., 2023), were originally developed for research on perception in preverbal human infants (Berlyne, 1958; Fantz, 1958) and have since been increasingly used in animal behaviour and cognition research, especially in non-human primates (e.g. Krupenye et al., 2016; Leinwand et al., 2022). One prominent variant of the looking time paradigm, alongside habituation and violation-of-expectation tasks, is the visual preference task (for a critical discussion of the term ‘visual preference’ see Winters et al., 2015). In this setup, visual stimuli are presented either simultaneously or sequentially, and a subject’s preference for a particular stimulus is assessed by measuring its visual attention to each stimulus (Steckenfinger & Ghazanfar, 2009; Racca et al., 2010; Méary et al., 2014; Leinwand et al., 2022). One of the main assumptions of the visual preference task is that animals direct their visual attention for longer to objects or scenes that they perceive as more salient or that elicit more interest (Winters et al., 2015). Increased interest in a specific stimulus can have multiple causes, such as perceived attractiveness or threat, novelty or familiarity (Wilson et al., 2023). However, the underlying motivation for increased interest in a stimulus is often difficult to assess, as multiple motivational factors can act simultaneously (for a critical discussion of the interpretation of looking behaviour see Wilson et al., 2023). Visual preference tasks do not require intensive training of learned responses, are relatively fast to perform and provide a more naturalistic setup than many decision-making tasks (Racca et al., 2010; Wilson et al., 2023).
Looking time paradigms may be particularly valuable for assessing socio-cognitive capacities such as individual discrimination and recognition, as social stimuli often have higher biological relevance than artificial and/or non-social stimuli and may therefore elicit stronger behavioural responses.

Individual recognition is a subset of recognition that occurs when one organism identifies another by its unique distinctive characteristics (Tibbetts & Dale, 2007). This process may be important in an animal’s social life, as an animal that recognises another individual thereby also recognises the sex and social status of a familiar group member, an unfamiliar out-group conspecific, or even the heterospecific status of members of other species (Coulon et al., 2009). To achieve visual individual recognition, many animal species rely on face recognition (e.g. paper wasps (Polistes fuscatus): Tibbetts, 2002; cichlid fish (Neolamprologus pulcher): Kohda et al., 2015; cattle (Bos taurus): Coulon et al., 2009; sheep (Ovis aries): Kendrick et al., 2001).

In social situations in which fast decision-making is required, it may be advantageous to use social categories rather than relying on individual features. These categories are established through social recognition, defined as the ability of individuals to categorise other individuals into different classes, e.g. familiar vs. unfamiliar, kin vs. non-kin, or dominant vs. subordinate (Gheusi et al., 1994). Categorising individuals can simplify decision-making in complex social environments by reducing the information load (Zayan & Vauclair, 1998; Ghirlanda & Enquist, 2003; Lombardi, 2008; Langbein et al., 2023). Social recognition might therefore be considered a cognitive shortcut for decision-making. The ability to discriminate between individuals shown in two-dimensional images on the basis of social recognition has been demonstrated in several non-human animals (e.g. great apes: Leinwand et al., 2022; capuchin monkeys (Cebus apella): Pokorny & de Waal, 2009; horses (Equus caballus): Lansade et al., 2020; cattle: Coulon et al., 2011; sheep: Peirce et al., 2001).

Like many ungulate species, goats are highly vigilant prey animals that rely strongly on their visual and auditory senses to detect predators (Adamczyk et al., 2015). As feral goats live in groups with a distinct hierarchy (Shank, 1972), they are likely able to tell familiar and unfamiliar conspecifics apart (Keil et al., 2012). Goats also show sophisticated social skills, e.g. the ability to follow the gaze direction of a conspecific (Kaminski et al., 2005; Schaffer et al., 2020). Attending to conspecific head cues probably plays an important role in a goat’s social life, as goats use head movements to signal their rank in the hierarchy (Shank, 1972). Goats have also been shown to attribute attention to humans (Nawroth et al., 2015), follow their gaze (Schaffer et al., 2020) and prefer to approach images of smiling over angry humans (Nawroth & McElligott, 2017), indicating high attention to human facial features. These characteristics make goats an ideal candidate species for investigating socio-cognitive capacities using looking time paradigms.

In this study, we tested whether a looking time paradigm can be used in dwarf goats to answer biological questions, in this case whether they are capable of spontaneously recognising familiar and unfamiliar con- and heterospecific faces presented as two-dimensional images. To do this, we presented the subjects with a visual preference task in which the visual stimuli were presented sequentially, and analysed their looking behaviour towards each stimulus. We hypothesised that goats allocate their visual attention to suddenly appearing objects in their environment (H1). We therefore predicted that our subjects would pay more attention (i.e. show longer looking durations) to a video screen presenting a stimulus than to a white screen (P1). Moreover, we hypothesised that goats show different behavioural responses to two-dimensional images of conspecific and heterospecific faces, irrespective of familiarity (H2). A preference for looking at conspecifics over heterospecifics has been shown in primates (Fujita, 1987; Demaria & Thierry, 1988; but see Tanaka, 2007 for an effect in the opposite direction; Kano & Call, 2014). Sheep, a ruminant species closely related to goats, also preferred conspecific over human images in a discrimination task in an enclosed Y-maze (Kendrick et al., 1995). We therefore predicted that the goats in our study would pay more attention (i.e. show longer looking durations) to conspecific than to heterospecific faces, showing a visual preference for conspecific stimuli (P2). We also hypothesised that goats are able to spontaneously recognise familiar and unfamiliar con- and heterospecifics when presented with their faces as two-dimensional images (H3). The ability to differentiate between familiar and unfamiliar individuals has been demonstrated in several domestic animal species, e.g. llamas (Lama glama) (Taylor & Davis, 1996; real humans as stimuli), horses (Lansade et al., 2020; photographs of human faces), cattle (Coulon et al., 2011; photographs of cattle faces) and sheep (Peirce et al., 2000, photographs of sheep faces; Peirce et al., 2001, photographs of human faces). We therefore predicted that the subjects in our study would show differential looking behaviour depending on the familiarity of the presented individuals. In particular, we expected that goats would show a visual preference (i.e. longer looking durations) for unfamiliar over familiar heterospecific stimuli (see Leinwand et al., 2022; Thieltges et al., 2011 for this preference in great apes and dolphins (Tursiops truncatus), respectively), and for familiar over unfamiliar conspecific stimuli (see Coulon et al., 2011 for this preference in cattle), resulting in a statistical interaction between the species shown in the stimuli and the depicted individual’s familiarity to our subjects (P3). We also explored the goats’ ear positions (forward, backward, horizontal, other) during stimulus presentation, as ear position has been proposed as an indicator of arousal and/or valence in goats (Briefer et al., 2015; Bellegarde et al., 2017).

Animals, Materials and Methods

Ethical note

The requirement for approval of this study was waived by the State Agency for Agriculture, Food Safety and Fisheries of Mecklenburg-Vorpommern (Process #7221.3-18196_22-2), as it was not considered an animal experiment under Section 7, paragraph 2 of the German Animal Welfare Act. Animal care and all experimental procedures were in accordance with the ASAB/ABS guidelines for the use of animals in research (ASAB Ethical Committee/ ABS Animal Care Committee, 2023). All measurements were non-invasive, and the experiment lasted no longer than ten minutes per day for each goat. Had the goats shown signs of high stress, the test would have been stopped.

Subjects and Housing

Two groups of non-lactating female Nigerian dwarf goats aged one to two years (group A: 6 subjects, mean age ± SD: 688.2 ± 5.2 d at the start of testing; group B: 6 subjects, 472.2 ± 1.2 d at the start of testing), reared at the Research Institute for Farm Animal Biology (FBN) in Dummerstorf, participated in the experiment. The animals had previously participated in an experiment with an automated learning device (Langbein et al., 2023) at a younger age (groups A and B) and in an experiment on prosocial behaviour in goats (unpublished data; group A only). Each group was housed in a pen of approximately 15 m² (4.8 m x 3.1 m) consisting of a deep-bedded straw area (3.1 m x 3.1 m) and a feeding area elevated by 0.5 m (3.1 m x 1.5 m). Each pen was equipped with a hay rack, a round feeder, an automatic drinker, a salt lick and a wooden podium for climbing. Hay and concentrate were provided twice a day, at 7:00 a.m. and 1:00 p.m., while water was available ad libitum. Subjects were not food-restricted during the experiments.

Experimental arena and apparatus

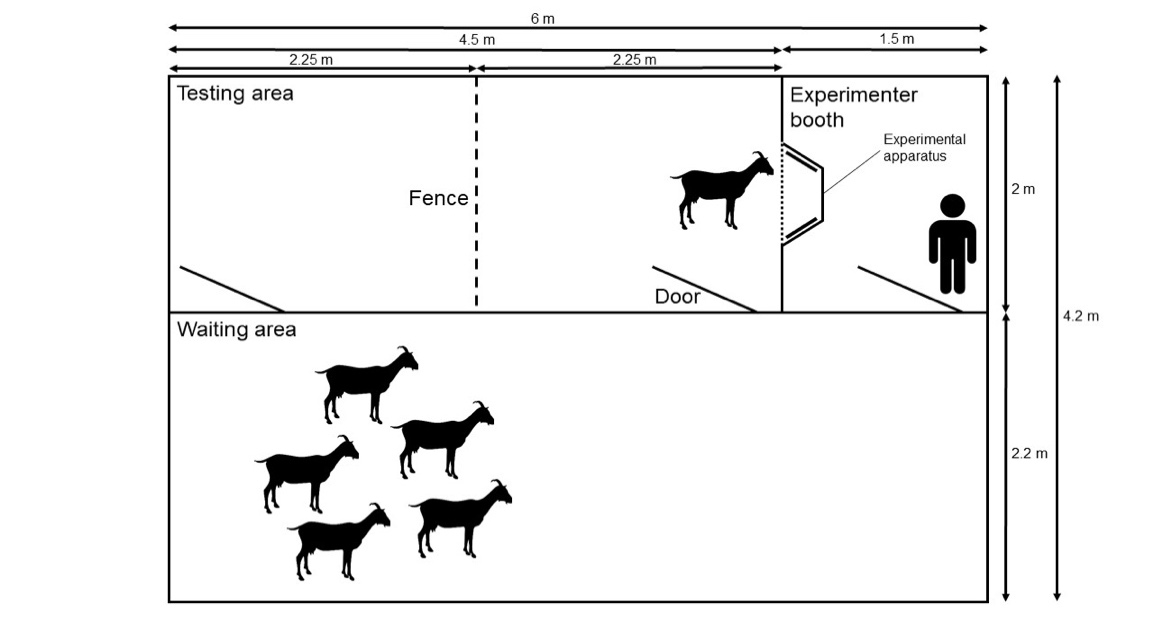

The experimental arena was located next to the two home pens. It consisted of three adjoining rooms with 2.1 m high wooden walls connected by doors (Fig. 1). Data collection took place in a testing area (4.5 m x 2 m) divided into two parts (2.25 m x 2 m) by a fence that facilitated the separation of individual subjects from the rest of the group. The experimental apparatus was inserted into the wall between the testing area and the experimenter booth (2 m x 1.5 m), which was located behind the apparatus and where an experimenter (E1) was positioned during all sessions. The subject in the testing area had no visual contact with E1. Between data collection sessions, subjects remained in an adjacent waiting area (6 m x 2.2 m).

Figure 1 - Scheme of the experimental arena, including the testing area, the experimenter booth, the waiting area and the experimental apparatus.

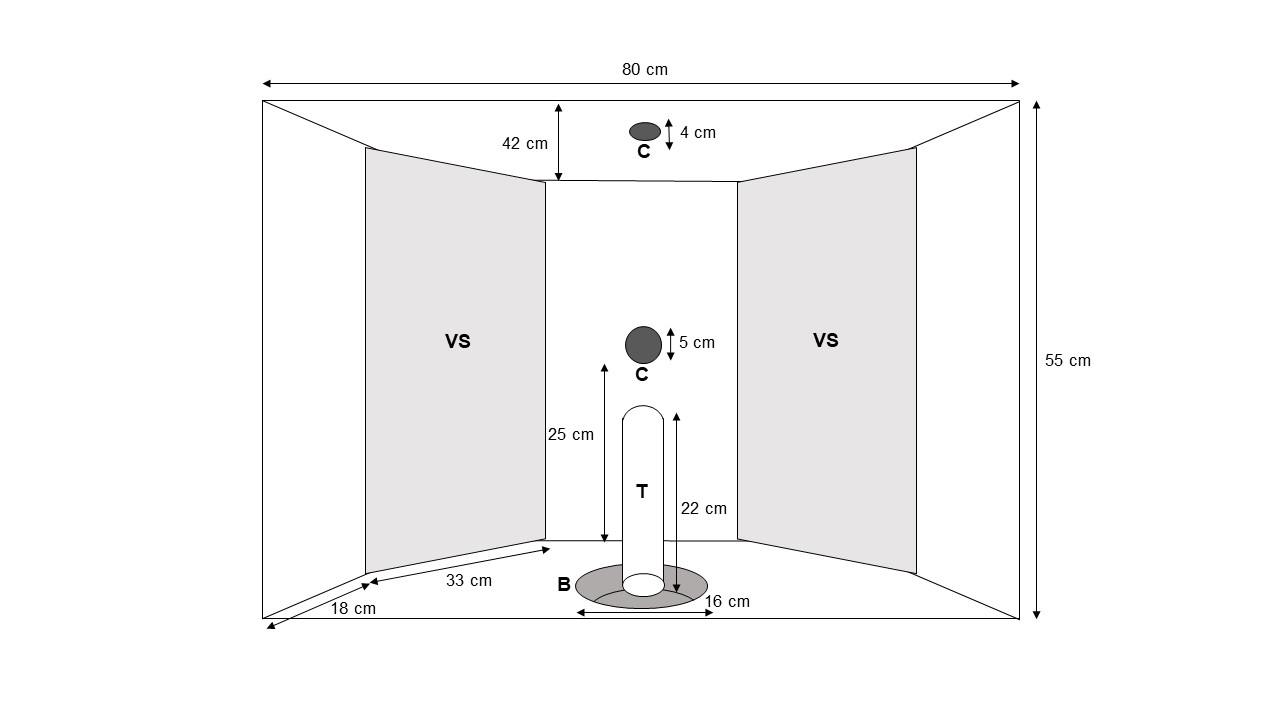

The experimental apparatus (Fig. 2) was inserted into the wall between the testing area and the experimenter booth at a height of 36 cm above the floor and consisted of two video screens (0.55 m x 0.33 m) mounted on the rear wall of the apparatus. The video screens were positioned laterally, each angled at approximately 45° relative to a subject standing in front of the apparatus. Subjects standing in front of the apparatus were considered to be looking approximately at the centre of the screens. Two digital cameras were installed: one (AXIS M1135, Axis Communications, Lund, Sweden) on the ceiling provided a top view of the subject, and one (AXIS M1124, Axis Communications, Lund, Sweden) on the wall between the two video screens provided a frontal view of the subject. Videos were recorded at 30 frames per second (fps). A food bowl, connected to the experimenter booth by a tube, was inserted into the bottom of the apparatus. This allowed E1 to deliver food items without being in visual contact with the tested subject.

Figure 2 - Experimental apparatus with video screens (VS), cameras (C), food bowl (B) and tube (T).

Habituation

The experiment required the animals to be handled by the experimenters (E1 and E2). To habituate the animals to handling, the experimenters entered the pen, talked to the animals, provided food items (uncooked pasta) and, where possible, touched them. The experimenters stayed in the pen for approximately 30 minutes daily for twelve days (group A) and eleven days (group B), until all animals remained calm when the experimenters entered the pen and could be hand-fed.

After this home pen habituation period, the animals were introduced as groups to the experimental arena for approximately 15 minutes per day. On the first two days of this phase, the subjects were allowed to move freely between the waiting area and the testing area, and food was provided throughout the arena. On the third day, the doors between the two areas were temporarily closed and food was provided only at the experimental apparatus: E1 sat in the experimenter booth and inserted food through the tube into the food bowl, while E2 remained with the animals in the testing area. The video screens of the apparatus were switched off on the first two days of this phase and thereafter displayed only white screens. Group habituation lasted ten sessions for both groups. After these ten sessions, all animals remained calm in the experimental arena and fed from the food bowl in the apparatus, and were therefore transferred to the next habituation phase.

In the next habituation phase, all goats were brought into the experimental arena, but only two subjects at a time were introduced to the testing area, while the other four group members remained in the waiting area to maintain acoustic and olfactory contact. Each pair received 20 food items over a period of 5 min via the tube connecting the food bowl in the apparatus with the experimenter booth. Subjects were reunited with the rest of the group immediately after the separation. Because some subjects showed signs of stress when separated in pairs, optimal pairings were identified over time. This habituation phase took ten sessions for group A and 14 sessions for group B. After this phase, all animals remained calm in the pair setting and fed from the food bowl in the apparatus, and were therefore transferred to the next habituation phase.

Finally, subjects were habituated individually for approximately 3 min per day, using the same procedure as in the pair habituation, except that only 10 food items were provided via the tube. This habituation phase took five sessions for both groups. Two subjects showed signs of high stress (e.g. loud vocalisations, restless wandering and refusal to feed) during this phase and were therefore excluded from the experiment. The remaining ten subjects, which stayed calm in the testing area and fed from the food bowl, proceeded to the experimental phase. One of these subjects had to be excluded at a later stage because it began to show indicators of high stress.

Experimental procedure

Stimuli and stimulus presentation

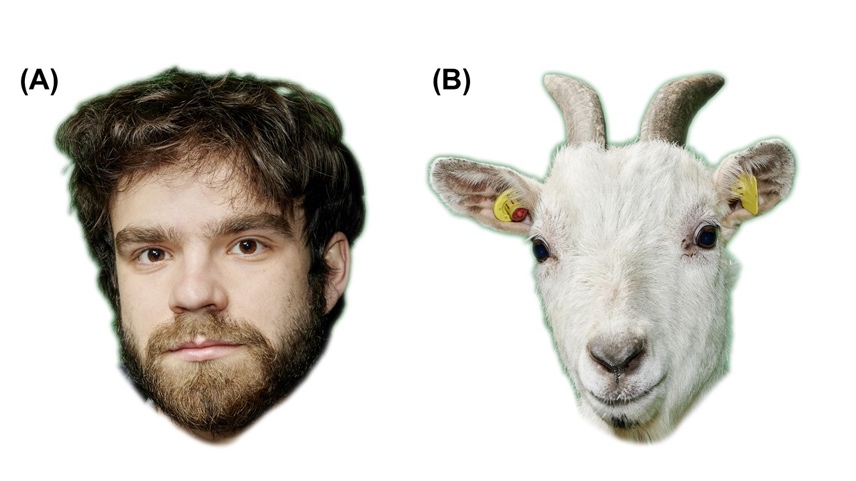

In this experiment, photographs of human and goat faces were used as stimuli. A professional photographer took pictures of the individual goats from both groups and of four humans, two of whom were familiar to the goats (E1 and E2) and two of whom were unfamiliar. The familiar humans had almost daily positive interactions with the animals (feeding them dry pasta and, where possible, touching and gently stroking them) during the habituation phase over at least three months (once a day, five days per week). Familiar and unfamiliar humans were matched for sex (one female and one male each). Each face was photographed in two slightly different orientations: the human faces were turned slightly to the left and to the right, and the goats were photographed in two different head orientations in which both eyes were visible (Fig. 3). This was done to increase the variability of the stimuli. Additionally, the brightness (ImageJ 1.53m, Wayne Rasband and contributors, National Institutes of Health, USA, https://imagej.net, Java 1.8.0-internal (32-bit)) and size (Corel® Photo-Paint X7 (17.1.0.572), © 2014 Corel Corporation, Ottawa, Canada) of each picture were measured. Goat and human faces did not differ in brightness (goats: 231.66 ± 6.1 (mean ± SD), humans: 225.91 ± 6.44), but the two stimulus categories differed in size (goats: 46092.06 ± 2655.86 px (mean ± SD), humans: 59317.5 ± 2260.65 px). The stimuli were presented approximately life-sized, in colour and on a white background. Each image was presented on either the left or the right screen, while the other screen remained white. Each test session consisted of a stimulus set of five slides: an initial white slide followed by four slides, each with a stimulus on either the left or the right side. Four stimulus sets showed human faces and 16 stimulus sets showed goat faces.
Each set contained pictures of two familiar and two unfamiliar goats or humans, with each individual presented only once. The human images were the same for all subjects, whereas the goat images varied, as no goat was shown its own picture as a stimulus. The stimuli were presented on the video screens in a pseudorandomised and counterbalanced order.
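The construction of one pseudorandomised, counterbalanced goat stimulus set can be sketched in Python. This is a minimal illustration under stated assumptions, not the procedure actually used: the function name `make_stimulus_set`, the goat IDs and the specific counterbalancing rule (within each set, one familiar and one unfamiliar stimulus appear on each screen) are hypothetical. The text only specifies that each set contained two familiar and two unfamiliar individuals, each shown once, preceded by a white slide, and that no goat saw its own picture.

```python
import random

def make_stimulus_set(subject, familiar, unfamiliar, rng):
    """Build one hypothetical five-slide set for `subject`.

    familiar / unfamiliar: candidate stimulus IDs (e.g. own-group and
    other-group goats). The subject's own picture is never used.
    """
    fam_pool = [s for s in familiar if s != subject]
    unfam_pool = [s for s in unfamiliar if s != subject]
    fam = rng.sample(fam_pool, 2)      # two distinct familiar individuals
    unfam = rng.sample(unfam_pool, 2)  # two distinct unfamiliar individuals

    trials = []
    for pair in (fam, unfam):
        sides = ["left", "right"]
        rng.shuffle(sides)             # assumed rule: one of each familiarity per screen
        trials += [(pair[0], sides[0]), (pair[1], sides[1])]
    rng.shuffle(trials)                # pseudorandom presentation order

    return [("white", None)] + trials  # initial white slide, then four stimuli


rng = random.Random(42)
group_a = ["A1", "A2", "A3", "A4", "A5"]   # hypothetical goat IDs
group_b = ["B1", "B2", "B3", "B4", "B5"]
stim_set = make_stimulus_set("A1", familiar=group_a, unfamiliar=group_b, rng=rng)
```

Passing an explicit `random.Random` instance keeps each generated schedule reproducible, which is convenient when stimulus orders have to be documented in advance.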

Data collection

Data collection took place in May and June 2022. Testing started at 9:00 a.m. each day, and each subject completed eight sessions, one per day: four consecutive sessions with goat stimuli and four consecutive sessions with human stimuli, with the stimulus species switching between sessions 4 and 5. Group A was presented with the goat faces first, group B with the human faces. A session started when the subject had been separated from the rest of the group and stood in front of the experimental apparatus. Prior to the stimulus presentation, one or two motivational trials were conducted, in which a food item was inserted into the apparatus and no stimulus was presented for the following 10 seconds. Immediately before each stimulus presentation, a food item was inserted into the food bowl. The stimulus presentation lasted 10 seconds. Each test trial was followed by another motivational trial, so that motivational and test trials alternated until all four stimuli of a set had been presented. The number of motivational trials varied with the subject’s behaviour and could be increased, e.g. if the animal was restless at the beginning of the session. Data from the subject that had to be excluded after the fifth test session were retained in the data set.
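The alternation of motivational and test trials within a session can be expressed as a simple schedule builder. This Python sketch is illustrative only: `build_session` and its parameter are hypothetical names, and a larger `n_initial_motivational` value stands in for the experimenter’s on-the-spot decision to add trials for a restless animal.

```python
def build_session(stimuli, n_initial_motivational=1):
    """Return an ordered list of (trial_type, stimulus) tuples.

    Motivational trials deliver a food item with no stimulus; test trials
    deliver a food item immediately before a stimulus is shown for 10 s.
    """
    if n_initial_motivational < 1:
        raise ValueError("at least one initial motivational trial is required")
    schedule = [("motivational", None)] * n_initial_motivational
    for i, stimulus in enumerate(stimuli):
        if i > 0:
            schedule.append(("motivational", None))  # alternate with test trials
        schedule.append(("test", stimulus))
    return schedule


session = build_session(["s1", "s2", "s3", "s4"], n_initial_motivational=2)
```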

Figure 3 - Examples of the faces used as stimuli: (A) a familiar human and (B) a goat (familiarity depended on the subject tested).

Data scoring and analysis

Video coding

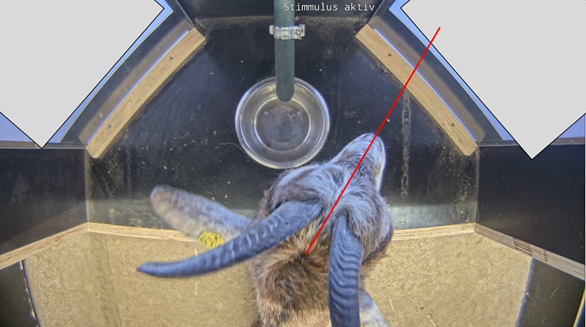

The behaviour of the individual goats was scored using BORIS (Friard & Gamba, 2016, version 7.13), an event-logging software for video coding and live observations. Looking behaviour was coded from the recordings of the camera providing a top view of the subject. Coding was performed frame by frame, and the coders remained blind to the stimulus presentation because the video screens of the apparatus were covered during coding. The first look was scored when, after lifting its head from the food bowl, the subject directed its gaze towards a video screen for the first time in a trial. In addition to the direction of the first look, the looking duration at each video screen was scored. To determine the direction in which the subject was looking, an imaginary line extending from the middle of the snout (orthogonal to the line connecting both eyes) was drawn (Fig. 4). As this line aligns with the goat’s binocular focus, it was used as an indicator of a goat directing its attention to a particular screen. Looking behaviour was not scored when the subject was not facing the wall containing the apparatus, because it could then not be ensured that the subject was actually attending to the presented stimulus. Video segments in which the goat’s face was occluded (e.g. when the subject was sniffing a video screen after moving into the apparatus with both forelegs) were not scored. Looking was also not scored when the subject’s snout was above its eye level, because the subject was then assumed to be looking at the ceiling of the apparatus rather than at the video screens or the wall between them. Likewise, looking was not scored when the subject’s snout was pointing perpendicularly down at the floor of the apparatus, as the subject was then assumed to be sniffing the floor with its gaze directed at it rather than at the video screens.
Inter-observer reliability for the looking duration towards S+ was assessed in a previous stimulus presentation study using the same coding rules and was very high (80 of 200 trials (40%) were coded by two observers; Pearson’s r = 0.96, p < 0.001).
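Because coding was done frame by frame on 30 fps recordings, durations follow directly from frame counts, and the reliability statistic is an ordinary Pearson correlation between the two observers’ per-trial values. A minimal Python/NumPy sketch, assuming per-trial frame counts are available for each observer (the function names and frame counts below are invented for illustration):

```python
import numpy as np

FPS = 30  # recording rate of the cameras

def frames_to_seconds(n_frames, fps=FPS):
    """Convert frame counts from frame-by-frame coding into seconds."""
    return np.asarray(n_frames, dtype=float) / fps

def pearson_r(observer_1, observer_2):
    """Pearson correlation between two observers' per-trial durations."""
    x = np.asarray(observer_1, dtype=float)
    y = np.asarray(observer_2, dtype=float)
    return float(np.corrcoef(x, y)[0, 1])

# Hypothetical frame counts for the same five trials coded by two observers
obs1 = frames_to_seconds([60, 95, 30, 150, 12])
obs2 = frames_to_seconds([63, 90, 33, 147, 15])
r = pearson_r(obs1, obs2)
```

Pearson’s r reflects linear association rather than absolute agreement, so a constant offset between observers would not lower it.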

Figure 4 - Image from the camera providing a top view of the apparatus during stimulus presentation. Video screens were covered during video coding to reduce potential coder bias. An imaginary line extending from the middle of the snout (red) was used during blind coding to decide which video screen the subject was looking at.
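The snout-line rule can be made explicit as a ray-segment intersection in top-view image coordinates. The following Python sketch is a geometric illustration only, not the authors’ coding tool (coding was performed by human observers in BORIS). It assumes that the positions of both eyes and the snout, as well as each screen outline as a line segment, are available in one 2D coordinate system; all function names, screen labels and coordinates are hypothetical. The vertical exclusion rules (snout above eye level or pointing at the floor) cannot be derived from a top view and would still need to be applied separately.

```python
import math

def gaze_direction(left_eye, right_eye, snout):
    """Unit vector orthogonal to the eye line, pointing from the eyes towards the snout."""
    ex, ey = right_eye[0] - left_eye[0], right_eye[1] - left_eye[1]
    mx, my = (left_eye[0] + right_eye[0]) / 2, (left_eye[1] + right_eye[1]) / 2
    dx, dy = -ey, ex                                    # one of the two perpendiculars
    if dx * (snout[0] - mx) + dy * (snout[1] - my) < 0:
        dx, dy = -dx, -dy                               # flip so it points forward
    norm = math.hypot(dx, dy)
    return dx / norm, dy / norm

def _cross(ax, ay, bx, by):
    return ax * by - ay * bx

def ray_segment_distance(origin, direction, a, b):
    """Distance t >= 0 along the ray to segment a-b, or None if the ray misses it."""
    dx, dy = direction
    sx, sy = b[0] - a[0], b[1] - a[1]
    denom = _cross(dx, dy, sx, sy)
    if abs(denom) < 1e-12:
        return None                                     # ray parallel to the screen
    qx, qy = a[0] - origin[0], a[1] - origin[1]
    t = _cross(qx, qy, sx, sy) / denom                  # position along the ray
    u = _cross(qx, qy, dx, dy) / denom                  # position along the segment
    return t if t >= 0 and 0 <= u <= 1 else None

def screen_looked_at(left_eye, right_eye, snout, screens):
    """Name of the first screen hit by the snout line, or None."""
    direction = gaze_direction(left_eye, right_eye, snout)
    hits = {}
    for name, (a, b) in screens.items():
        t = ray_segment_distance(snout, direction, a, b)
        if t is not None:
            hits[name] = t
    return min(hits, key=hits.get) if hits else None

# Hypothetical top-view coordinates (arbitrary units); apparatus wall at y = 0
SCREENS = {"screen_1": ((-10, 0), (-2, 0)), "screen_2": ((2, 0), (10, 0))}
```

For example, a goat with eyes at (-1.5, 22.5) and (1.5, 21.5) and its snout at (0, 18) is assigned to `screen_1`, while a goat looking straight ahead hits the wall between the screens and returns `None`.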

Ear positions during stimulus presentation were also coded frame by frame, using the recordings of the camera providing a frontal view of the subject. We scored four ear positions (see Boissy et al., 2011; Briefer et al., 2015 for related scoring in goats and sheep): forward (tips of both ears pointing forward), backward (tips of both ears pointing backward), horizontal (ear tips perpendicular to the head-rump axis) and other (all remaining positions, i.e. asymmetrical ears or a change between two positions). Ear positions were analysed for the entire ten seconds of stimulus presentation, regardless of whether the subject was looking at the video screens. Video segments were scored only if both ears (or at least enough of each ear to determine its position precisely) were visible. Segments were also not scored when the ear position could not be clearly determined even though both ears were visible, e.g. when ear tip positions were unclear because the subject was standing further away. Inter-observer reliability for the duration of ears in each position was high (32 of 305 trials (10.5%) were coded by two observers; Pearson’s r = 0.85, p < 0.001).

Statistical analysis

Statistical analysis was carried out in R (R Core Team, 2022, Version 4.2.2).

To assess whether subjects looked longer at one of the video screens, each subject’s mean looking duration at the video screen presenting a stimulus (S+) and at the video screen without a stimulus (S-) were compared using a Wilcoxon signed-rank test, as the data were not normally distributed. Subsequently, we counted how often the first look (FL) was directed towards S+ or S-, and calculated the probability p of the FL being directed towards S+ rather than S-. Additionally, the odds, representing how much more frequently the FL was directed towards the stimulus than towards the white screen, were calculated as follows:

p / (1 – p)
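As a worked example of this calculation, the Python sketch below computes p and the odds from counts of first looks, using only trials in which the FL went to one of the two screens. The function name and counts are illustrative. Since p / (1 – p) reduces to the ratio of the two counts, the code uses that ratio directly, which also avoids a division by zero when every FL went to S+.

```python
import math

def first_look_odds(n_to_stimulus, n_to_white):
    """Probability p that the FL went to S+, and the odds p / (1 - p)."""
    n = n_to_stimulus + n_to_white
    if n == 0:
        raise ValueError("no trials with a first look")
    p = n_to_stimulus / n
    # p / (1 - p) = (n_S+ / n) / (n_S- / n) = n_S+ / n_S-
    odds = math.inf if n_to_white == 0 else n_to_stimulus / n_to_white
    return p, odds


p, odds = first_look_odds(30, 10)  # hypothetical counts: p = 0.75, odds = 3.0
```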

Furthermore, four linear mixed-effects models were fitted (R package “blme”; Chung et al., 2013). The four response variables were “looking duration at S+” (out of the 10 s of stimulus presentation), “Forward_Ratio” (duration of ears oriented forward divided by the summed durations of all four ear positions), “Backward_Ratio” (duration of ears oriented backward divided by the summed durations of all four ear positions) and “Horizontal_Ratio” (duration of ears oriented horizontally divided by the summed durations of all four ear positions).
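The ratio variables can be derived from frame-by-frame ear codes. A hedged Python sketch, assuming each frame of the 10-s presentation carries one of the four position codes, or None when it could not be scored (the label strings, function name and example trial are hypothetical):

```python
from collections import Counter

EAR_POSITIONS = ("forward", "backward", "horizontal", "other")

def ear_ratios(frame_labels):
    """Compute Forward/Backward/Horizontal ratios from per-frame ear codes.

    Frames coded None (ears not visible or position unclear) are ignored,
    so the denominator is the summed duration of the four scored positions.
    Because every frame lasts 1/30 s, frame-count ratios equal duration ratios.
    """
    counts = Counter(label for label in frame_labels if label in EAR_POSITIONS)
    total = sum(counts.values())
    if total == 0:
        return None  # no scorable frames in this trial
    return {f"{pos.capitalize()}_Ratio": counts[pos] / total
            for pos in ("forward", "backward", "horizontal")}

# A hypothetical 10-s trial at 30 fps = 300 frames
trial = (["forward"] * 120 + ["horizontal"] * 60 + ["backward"] * 30
         + ["other"] * 30 + [None] * 60)
ratios = ear_ratios(trial)
```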

For all models, we checked the residuals graphically for normality and homoscedasticity (R package “performance”; Lüdecke et al., 2021). To meet model assumptions, “looking duration at S+” was log-transformed, and trials in which it was zero (n = 17) were excluded, as a zero value indicated that the subject might have been distracted. All models included “Stimulus species” (two levels: human, goat), “Stimulus familiarity” (two levels: familiar, unfamiliar) and “Testing order” (two levels: human stimuli first, goat stimuli first) as fixed effects. We also tested for an interaction between “Stimulus species” and “Stimulus familiarity”. Repeated measurements (“Session”, 1-8) within each “Subject” (identity of the goat) were included as nested random effects. We followed a full model approach, i.e. we fitted a maximal model, which we present and interpret (Forstmeier & Schielzeth, 2011). First, we calculated the global p-value (comparing the maximal model with the null model) using parametric bootstrapping (1,000 bootstrap samples, R package “pbkrtest”; Halekoh & Højsgaard, 2014). If this comparison yielded a low p-value, we tested each predictor (including the interaction) individually by comparing the full model with a model omitting that predictor. P-values from parametric bootstrap tests give the fraction of simulated likelihood ratio test (LRT) statistics that are larger than or equal to the observed LRT statistic. This test is more appropriate than the raw LRT because it does not rely on large-sample asymptotics and correctly accounts for the random-effects structure (Halekoh & Højsgaard, 2014). Moreover, we tested whether looking duration towards S+ increased between session 4 and session 5 as a result of dishabituation caused by the switch in stimulus species. To this end, each subject’s mean looking duration towards S+ in both sessions was calculated, and the two sessions were compared using a paired t-test. The type I error rate was set at α = 0.05 for all tests.
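The logic of the parametric bootstrap likelihood ratio test can be illustrated with a deliberately simplified model. The Python/NumPy sketch below uses Gaussian linear models with fixed effects only; it omits the nested random effects (Session within Subject) and the priors used by blme, so it reproduces only the bootstrap principle, not the analysis itself. The data, effect sizes and function names are hypothetical. For mixed models in R, the pbkrtest package provides `PBmodcomp()`.

```python
import numpy as np

def ml_gaussian_fit(y, X):
    """Least-squares fit with the maximum-likelihood variance and log-likelihood."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    n = y.shape[0]
    sigma2 = float(resid @ resid) / n
    loglik = -0.5 * n * (np.log(2 * np.pi * sigma2) + 1.0)
    return beta, sigma2, loglik

def lrt_statistic(y, X_full, X_null):
    """Likelihood ratio statistic 2 * (logLik_full - logLik_null)."""
    return 2.0 * (ml_gaussian_fit(y, X_full)[2] - ml_gaussian_fit(y, X_null)[2])

def parametric_bootstrap_lrt(y, X_full, X_null, n_boot=1000, seed=0):
    """Return (observed LRT, bootstrap p-value).

    Responses are simulated from the fitted null model; the p-value is the
    fraction of simulated LRT statistics >= the observed one.
    """
    rng = np.random.default_rng(seed)
    observed = lrt_statistic(y, X_full, X_null)
    beta0, sigma2_0, _ = ml_gaussian_fit(y, X_null)
    mu0 = X_null @ beta0
    sims = np.array([
        lrt_statistic(mu0 + rng.normal(0.0, np.sqrt(sigma2_0), size=y.shape[0]),
                      X_full, X_null)
        for _ in range(n_boot)
    ])
    return observed, float(np.mean(sims >= observed))

# Hypothetical data: log-transformed looking durations (zeros removed beforehand)
rng = np.random.default_rng(1)
species = np.repeat([0.0, 1.0], 20)            # 0 = human, 1 = goat (dummy coded)
durations = np.exp(0.5 + 0.8 * species + rng.normal(0.0, 0.3, 40))
y = np.log(durations)
X_null = np.ones((40, 1))                      # intercept only
X_full = np.column_stack([X_null, species])    # intercept + species
obs, p = parametric_bootstrap_lrt(y, X_full, X_null, n_boot=200)
```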

Results

Preference for S+ over S- regarding looking duration

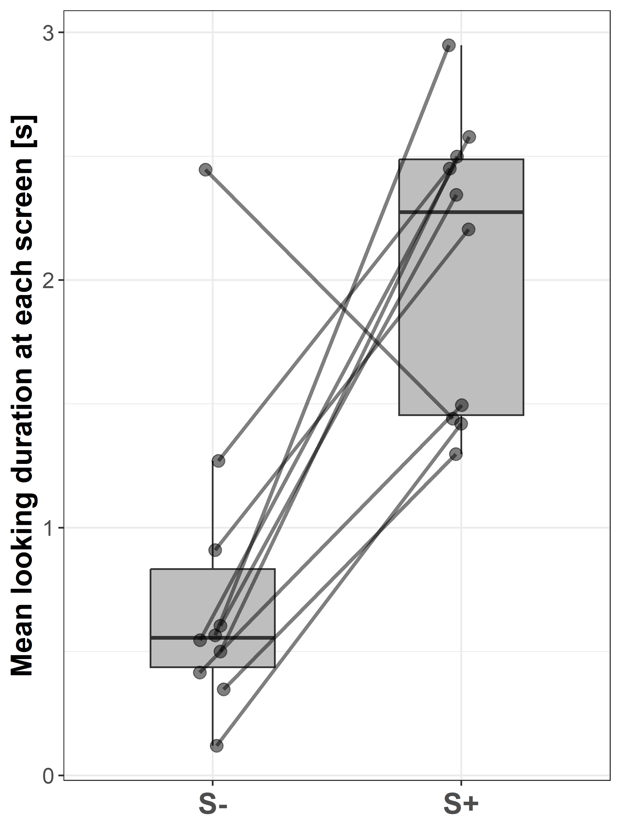

Based on each subject’s mean looking duration, subjects looked significantly longer at S+ (2.27 ± 1.03 s; median ± IQR) than at S- (0.56 ± 0.4 s; Wilcoxon signed-rank test: V = 53, p = 0.006; Fig. 5).
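For small samples, the exact null distribution of the signed-rank statistic V can be enumerated by flipping the signs of the ranks. The Python sketch below does this; the function names are hypothetical, and it assumes zero differences are dropped. As a check, it assumes that all ten subjects entering the experimental phase contributed one paired value each without ties (n = 10, not stated in this paragraph); under that assumption, V = 53 corresponds to an exact two-sided p = 6/1024 ≈ 0.006, consistent with the reported value. With tied ranks, the enumeration yields a permutation p-value, whereas R’s `wilcox.test` switches to a normal approximation.

```python
from itertools import product

def signed_ranks(diffs):
    """Ranks of |d| (average ranks for ties); zero differences must be removed first."""
    order = sorted(range(len(diffs)), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * len(diffs)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1   # average rank of the tied block
        i = j + 1
    return ranks

def exact_two_sided_p(v, ranks):
    """Enumerate all 2**n sign patterns to obtain the null distribution of V."""
    upper = lower = 0
    for signs in product((False, True), repeat=len(ranks)):
        w = sum(r for r, positive in zip(ranks, signs) if positive)
        upper += w >= v - 1e-9
        lower += w <= v + 1e-9
    total = 2 ** len(ranks)
    return min(1.0, 2 * min(upper, lower) / total)

def wilcoxon_signed_rank(x, y):
    """Return V (sum of ranks of positive differences x - y) and the exact p-value."""
    diffs = [a - b for a, b in zip(x, y) if a != b]   # drop zero differences
    ranks = signed_ranks(diffs)
    v = sum(r for r, d in zip(ranks, diffs) if d > 0)
    return v, exact_two_sided_p(v, ranks)
```

Enumeration grows as 2ⁿ and is only practical for small samples such as this one. Note also that SciPy’s `scipy.stats.wilcoxon` reports, for two-sided tests, the smaller of the positive and negative rank sums rather than R’s V.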

Figure 5 - Boxplots of each subject’s mean looking duration at the video screen without a stimulus (S-) and at the video screen presenting a stimulus (S+) across all trials. Lines connect data points from the same individual.

Preference for S+ over S- regarding first look

In 264 of the 301 trials (86.6%) in which the animals attended to the video screens (4 trials in which the animals looked at neither the left nor the right video screen were excluded), the FL was directed towards S+. Accordingly, the odds of the FL being directed towards S+ rather than S- were approximately six to one.

Factors affecting looking duration at S+

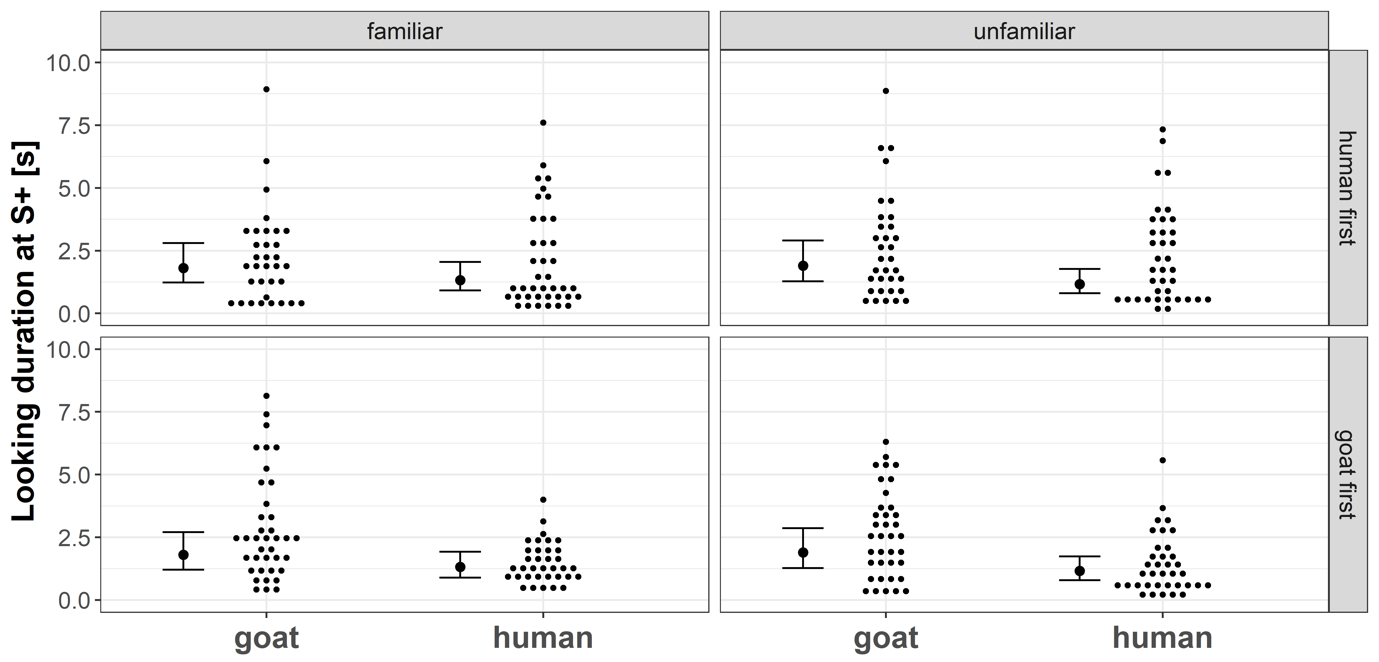

In the looking duration model, we found no substantial interaction between “Stimulus species” and “Stimulus familiarity” (p = 0.27). Across all test trials, goats looked longer at goat faces than at human faces (p = 0.027, Fig. 6). Neither the familiarity of the depicted individual nor the testing order substantially affected looking duration at S+ (both p ≥ 0.48, Fig. 6).

Figure 6 - Small dots represent looking durations at the video screen presenting a stimulus (S+) by species, familiarity and testing order. Large black dots are the corresponding model estimates for each condition, and thin black lines with whiskers show the 95% confidence intervals from the maximal model (including main effects and the interaction).

Differences in looking duration when stimulus species switched (Session 4 vs. Session 5)

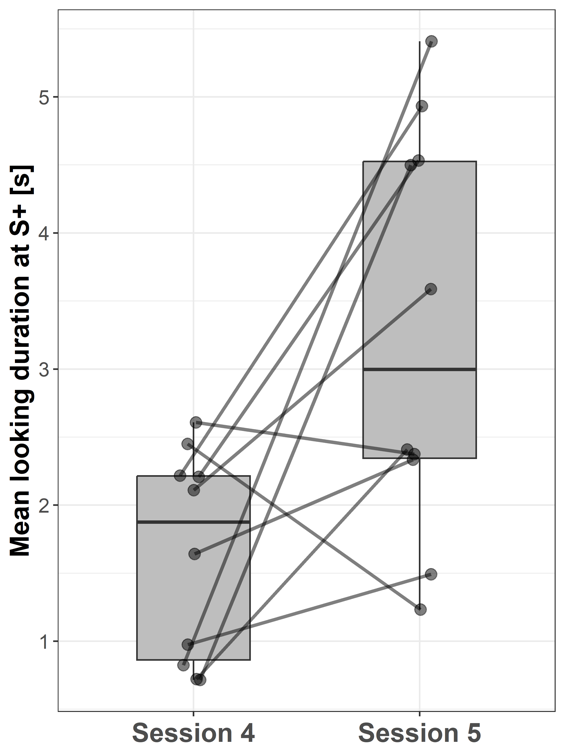

Subjects looked longer at S+ in session 5 (3.28 ± 1.5 s; mean ± SD) than in session 4 (1.58 ± 0.77 s; paired t-test: t = -1.70, p = 0.014; Fig. 7), i.e. after the stimulus species switched from human to goat or vice versa.

Factors affecting ear positions during stimulus presentation

None of the three ear position models revealed a significant interaction between “Stimulus species” and “Stimulus familiarity” (all p ≥ 0.32). We found no statistically supported differences in the ratios of the three ear positions for the fixed factors “Stimulus species” (all p ≥ 0.57), “Stimulus familiarity” (all p ≥ 0.44) or “Testing order” (all p ≥ 0.61).

Figure 7 - Boxplots of each subject’s mean looking duration at S+ in sessions 4 and 5 (stimulus switch from human to goat or vice versa). Lines connect data points from the same individual.

Discussion

In this study, we tested whether a looking time paradigm can be used to address questions on recognition capacities in dwarf goats, specifically whether goats are capable of recognising familiar and unfamiliar con- and heterospecific faces presented as two-dimensional images. To assess visual attention (via looking time) and arousal (via ear positions), we measured the goats’ looking behaviour towards the stimuli and their ear positions during each trial. Our results show that goats respond differently to 2D images of con- and heterospecifics, showing a visual preference for goat faces. However, their responses did not differ between familiar and unfamiliar individuals (irrespective of species), suggesting that goats either cannot spontaneously assign social recognition categories to 2D images or are equally motivated to attend closely to both categories (albeit for different reasons). These findings partly contrast with related research on goats and other domestic ungulate species (Coulon et al., 2011; Langbein et al., 2023) and thus raise questions about the comparability of test designs.

As predicted (P1), goats paid more attention to a video screen presenting a stimulus (S+) than to a white screen (S-), supporting our hypothesis that goats allocate their visual attention to suddenly appearing objects in their environment (H1). Additionally, 86.6% of first looks were directed towards S+ rather than S-. These results indicate that the subjects attended to the presented stimuli and thus provide good evidence that our looking time paradigm is an appropriate experimental setup for studying visual perception in small ungulates.

As predicted (P2), subjects paid more attention to goat than to human faces, supporting our hypothesis that goats show different behavioural responses to two-dimensional images of conspecific compared to heterospecific faces, irrespective of familiarity (H2). This aligns with Kendrick et al. (1995), who found that sheep preferred conspecifics over humans in a visual discrimination task, and with studies conducted with rhesus macaques (Fujita, 1987; Demaria & Thierry, 1988). There are several possible reasons why the goats in our study paid more visual attention to the conspecific stimuli. One possible explanation is that conspecific stimuli generally convey more biologically relevant information, such as the identity, sex, age, hierarchical status or even emotional state of a conspecific. This principle is likely to apply to goats as well, given their highly social nature, whether as an inherent trait or shaped by developmental factors. In addition, the subjects’ limited exposure to humans prior to the study might have resulted in a bias towards conspecifics. It would therefore be interesting to see whether hand-reared goats also show a conspecific bias. We cannot fully exclude that participation in other experiments influenced the behaviour of our subjects, especially as they had previously taken part in an experiment with an automated learning device in which photographs were presented on a computer display. However, we never observed our subjects showing the learned response from this previous experiment (touching the video screen with their snout to indicate a choice of photograph), so it seems less likely that they transferred learned responses and associated behaviours to our study.
Another possible reason for the goats’ visual preference for conspecific faces is that the sight of a conspecific might act as a stress buffer during isolation in the test trials, as has been shown for sheep isolated from their social group (Da Costa et al., 2004). Da Costa et al. (2004) tested whether sheep in social isolation would show fewer indications of stress when presented with images of conspecifics compared to images of goats or inverted triangles, and found that seeing a conspecific face in social isolation significantly reduced behavioural, autonomic and endocrine indices of stress. As feral goats and sheep have comparable social structures, it is reasonable to assume that images of conspecifics might likewise have had positive effects on the subjects in our study. Additional assessment of stress parameters, such as heart rate (variability) or cortisol concentration, is recommended to test this assumption (see e.g. Da Costa et al., 2004).

Alternatively, the shorter looking durations at the human stimuli might reflect avoidance of the human face images, as the depicted humans might be perceived as potential predators (Davidson et al., 2014). This might have led to behavioural responses aimed at reducing exposure to the human images, e.g. moving away from the experimental apparatus. In sheep, being stared at by a human, compared with no human eye contact, resulted in more locomotor activity and urination, but did not alter fear-related behaviours such as escape attempts (Beausoleil et al., 2006). This might imply that sheep interpret human eye contact as a warning cue (Beausoleil et al., 2006). The goats in our study might thus have simply avoided the human images (and gaze) rather than actively preferring the goat images.

Additional support for H2 is provided by the finding that the subjects in our study also looked longer at the stimuli in session 5 than in session 4, when the presented stimulus species was switched from human to goat or vice versa. This switch corresponds to a habituation-dishabituation paradigm. In this paradigm, a habituation stimulus is presented to the subject either for a long period or over several short periods (habituation period) and is then replaced by a novel stimulus in the dishabituation period (Kavšek & Bornstein, 2010). In habituation-dishabituation paradigms, the subject’s attention to the habituation stimulus is expected to decrease during the habituation period, but then to increase in the dishabituation period when a novel stimulus (that the subject is able to distinguish from the previous one) is presented (Kavšek & Bornstein, 2010). As the subjects in our study looked longer at the novel stimulus species than at the previous one, it can be assumed that they noticed the change of stimuli and were therefore able to discriminate between conspecific and heterospecific stimuli. This further supports our primary finding that goats can discriminate between con- and heterospecifics presented as two-dimensional images.

Contrary to our third prediction (P3), we found no statistical support for differences in looking behaviour with respect to the familiarity of the depicted individuals. Consequently, we have to reject the hypothesis that goats are able to spontaneously recognise familiar and unfamiliar con- and heterospecifics when presented with their faces as two-dimensional images (H3). There are several possible reasons, of varying likelihood, that might explain this finding. One possibility is that the subjects were simply not able to differentiate between familiar and unfamiliar individuals because they did not form the concepts of familiarity and unfamiliarity associated with social recognition in general. Alternatively, visual head cues alone might not be sufficient for goats to form these categories. Indeed, Keil et al. (2012) found that goats do not necessarily need to see a conspecific’s head to discriminate between group members and goats from another social group. In contrast, studies in other ruminants, such as cattle (Coulon et al., 2011) and sheep (Peirce et al., 2000, 2001), have shown that these species are able to form this concept from two-dimensional head cues in a visual discrimination task. Langbein et al. (2023) also found some evidence that goats are able to associate two-dimensional representations of conspecifics with real animals in a visual discrimination task. It is therefore surprising that the subjects in our study did not show differential looking behaviour with respect to the familiarity of the individuals presented.

It is also possible that the subjects were indeed able to differentiate between the categories of stimulus familiarity, but were equally motivated (albeit for different reasons) to pay close attention to both categories, resulting in similar looking durations. The different reasons for looking at familiar or unfamiliar con- or heterospecifics, e.g. novelty (Fantz, 1964; Tulving & Kroll, 1995), threat perception, individual recognition, positive associations or social buffering (for a more detailed discussion see Rault, 2012), might therefore have offset each other and ultimately led to the absence of a visual preference for a specific category in this study. This assumption also seems plausible in light of the results of Demaria & Thierry (1988), who presented images of both familiar and unfamiliar conspecifics to stump-tailed macaques. They found no difference in looking durations between the two stimulus categories, but observed that some subjects, when looking at the image of a familiar conspecific, turned back to look at the social group to which the depicted macaque belonged. This pattern was never observed for unfamiliar conspecifics, which might indicate that the subjects did indeed distinguish between familiar and unfamiliar individuals. However, this capability could not be inferred from the looking durations per se, as these showed no preference for either category.

We did not find statistical support for an association between the presented stimulus species or the familiarity of the depicted individuals and the amount of time spent with the ears in a specific position. A higher proportion of time with the ears in a forward position might be associated with situations that lead to high arousal and/or increased attention in goats (Briefer et al., 2015; Bellegarde et al., 2017). We therefore expected the subjects in our study to show a higher proportion of ears in a forward position when presented with the stimulus species they looked at longer (here, goat faces). We can only speculate as to why this was not the case. One possibility is that the “ear forward position”, as well as the “ears backward position”, is not solely associated with the level of arousal or attention in goats, but also with the valence of the situation experienced by the animal (Briefer et al., 2015; Bellegarde et al., 2017). As our looking duration data do not allow us to safely infer that subjects perceived the two-dimensional images as representations of their real, three-dimensional counterparts, we cannot make reliable assumptions about the levels of valence and arousal that our stimuli might have elicited in the focal subjects, which makes a comparison problematic. It is also possible that the 2D images did not evoke sufficiently strong arousal for ear position to be an informative behavioural parameter. Therefore, ear position during stimulus presentation does not seem to be an appropriate parameter for testing the attention of goats in our looking time paradigm.

This study has shown that looking time paradigms can be used to test discrimination abilities and visual preferences in goats, provided that the results are interpreted with caution. It thus lays a foundation for addressing related research questions with this methodology. As this study was only partly able to demonstrate social visual preferences in goats, further studies are needed to identify the factors that primarily direct the attention of goats. To this end, social visual stimuli other than head cues alone could be used, e.g. full-body images of con- or heterospecifics or even videos. In addition, different sensory modalities could be addressed, e.g. by pairing visual with acoustic or olfactory cues. Such a cross-modal approach could provide subjects with a more holistic, yet highly controlled, representation of other individuals. Assessing the social relationships between the subjects in their home environment, such as dominance rank or the distribution of affiliative interactions, could provide additional information for explaining potential biases or preferences in subjects’ looking durations and should be considered in future studies. Finally, a more diverse study population (larger age range, more than one sex tested, etc.) would allow more generalisable statements about social visual preferences in goats. Future looking time studies in goats should not only focus on behavioural responses to specific stimuli, but should also consider measuring physiological parameters that indicate stress. For example, measuring heart rate or heart rate variability (e.g. Langbein et al., 2004) or cortisol concentration (Da Costa et al., 2004) could help to obtain a more comprehensive picture of how goats perceive specific 2D stimuli.
In terms of technical advances, eye-tracking could also be considered to provide more accurate estimates of visual attention in focal subjects (Shepherd & Platt, 2008; Völter & Huber, 2021; e.g. Gao et al., 2022). In the future, this looking time approach could also be used to assess the interplay between cognition and emotions, e.g. to assess attention biases associated with the affective state of an animal (Crump et al., 2018). Provided that appropriate stimuli can be identified, an automated looking time paradigm could offer an efficient approach to assessing husbandry conditions, not only experimentally but also on-farm.

Conclusion

The looking time paradigm presented here appears to be generally suitable for testing visual preferences in dwarf goats, while assessing the concept of familiarity may require better control of confounding factors to disentangle the different motivations associated with the presented stimuli. Goats showed a visual preference for conspecifics when discriminating between two-dimensional images of goats and humans. This is consistent with previous findings in macaques (Fujita, 1987; Demaria & Thierry, 1988) and sheep (Kendrick et al., 1995). In contrast to previous research in a variety of species (e.g. great apes: Leinwand et al., 2022; capuchin monkeys: Pokorny & de Waal, 2009; cattle: Coulon et al., 2011; horses: Lansade et al., 2020; sheep: Peirce et al., 2001), we found no attentional differences when goats were presented with two-dimensional images of familiar and unfamiliar individuals, which calls into question the comparability of results obtained with different experimental designs.

Acknowledgements

We would like to thank the staff of the Experimental Animal Facility Pygmy Goat at the Research Institute for Farm Animal Biology in Dummerstorf, Germany, for taking care of the animals. Special thanks go to Michael Seehaus for technical support. Preprint version 4 of this article has been peer-reviewed and recommended by Peer Community In Animal Science (https://doi.org/10.24072/pci.animsci.100267; Veissier, 2024).

Funding

The authors declare that they have received no specific funding for this study.

Conflict of interest disclosure

Christian Nawroth is a recommender for PCI Animal Science. The authors declare that they comply with the PCI rule of having no financial conflicts of interest in relation to the content of the article.

Data, scripts, code, and supplementary information availability

Data, scripts and code are available online (https://doi.org/10.17605/OSF.IO/NEPWU, Deutsch et al., 2023).

CC-BY 4.0